- What it is: Neuromorphic (aka brain‑inspired) computing rearranges chips so memory sits with compute, slashing data movement—the main energy drain in today’s AI. IBM calls this “in‑/near‑memory” design, and it’s at the core of its NorthPole and analog “Hermes” research chips. IBM Research

- Why now: AI electricity demand is exploding. The IEA projects global data‑centre power use will more than double by 2030, to roughly 945 TWh, with AI the biggest driver, putting efficiency front and centre. IEA

- Who’s leading: IBM’s NorthPole shows record inference speed/efficiency by tightly coupling memory and compute; Intel’s Hala Point stitches 1,152 Loihi 2 chips into the world’s largest neuromorphic system for research at Sandia. IBM Research

- Edge to cloud: Event‑based cameras from Sony/Prophesee only send “changes,” enabling ultra‑low‑power, low‑latency vision at the edge; near‑memory designs like NorthPole attack data‑centre inference bottlenecks. Sony Semiconductor

- What’s not solved: Training directly on analog/memristor hardware is still maturing; toolchains and benchmarks are fragmented—though a new, community‑driven NeuroBench standard is emerging. Nature

- 2026 outlook: Expect more pilot deployments in event‑based perception, robotics, and “AI appliances” for LLM inference; ecosystem access is expanding via Europe’s EBRAINS platforms (BrainScaleS, SpiNNaker). ebrains.eu

What is neuromorphic (brain‑inspired) computing?

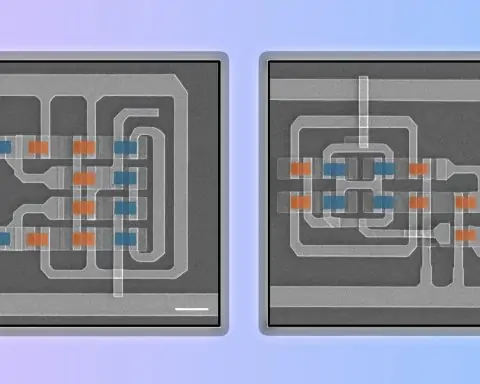

Neuromorphic computing borrows from the brain’s playbook: it co‑locates memory and computation to avoid the von Neumann “traffic jam” of shuttling data between separate memory and processing units. IBM describes this shift as in‑memory (analog crossbars that both store and compute) or near‑memory (digital cores wrapped around fast on‑chip memory). “In‑memory computing minimizes or reduces to zero the physical separation between memory and compute,” says Valeria Bragaglia of IBM Research. IBM Research

That architectural change matters because most AI work is vast matrix multiplications—simple math done trillions of times. When the weights sit on‑chip next to compute, latency and energy plummet. IBM’s overview explains how both its analog Hermes chip (phase‑change memory crossbars) and the digital NorthPole prototype use this principle to beat the memory wall. IBM Research
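
To make the data‑movement argument concrete, here is a back‑of‑envelope sketch in Python. The function name and every per‑operation energy value are hypothetical placeholders, not figures from IBM or from any measured chip; only the shape of the calculation matters. It assumes batch‑1 inference, where each weight is fetched once per token, so the fetch cost is multiplied across the whole model.

```python
def energy_per_token_joules(n_weights, e_mac_pj, e_fetch_pj):
    """Energy for one pass over all weights: one MAC plus one weight fetch each.

    e_mac_pj and e_fetch_pj are picojoules per operation. Both are
    assumptions supplied by the caller, not measurements.
    """
    return n_weights * (e_mac_pj + e_fetch_pj) * 1e-12


N_WEIGHTS = 3_000_000_000     # a 3-billion-parameter model
E_MAC_PJ = 1.0                # hypothetical cost of one multiply-accumulate
E_FETCH_OFF_CHIP_PJ = 100.0   # hypothetical cost of one weight fetch from off-chip DRAM
E_FETCH_ON_CHIP_PJ = 1.0      # hypothetical cost of one weight fetch from adjacent on-chip memory

off_chip = energy_per_token_joules(N_WEIGHTS, E_MAC_PJ, E_FETCH_OFF_CHIP_PJ)
on_chip = energy_per_token_joules(N_WEIGHTS, E_MAC_PJ, E_FETCH_ON_CHIP_PJ)

print(f"off-chip weights: {off_chip:.3f} J/token")
print(f"on-chip weights:  {on_chip:.3f} J/token")
print(f"ratio: {off_chip / on_chip:.1f}x")
```

With these placeholder values the arithmetic is dominated by the fetch term, not the multiply. Swapping in measured numbers for a specific memory technology would change the ratio but not the structure of the estimate.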

Why now: AI’s energy curve is unsustainable

AI’s rise collides with electricity realities. The International Energy Agency now expects data‑centre power demand to more than double by 2030 to roughly 945 TWh, with AI the main accelerant. That’s why architectures that cut data movement—not just crank FLOPs—are in the spotlight. IEA

Intel’s neuromorphic lead Mike Davies puts it bluntly: “The computing cost of today’s AI models is rising at unsustainable rates.” His team built Hala Point, a 6U cluster with 1,152 Loihi 2 processors (≈1.15B neurons, 128B synapses) to explore more sustainable, event‑driven AI at Sandia National Labs. Intel Corporation
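
As a quick sanity check on the scale of those figures, the system totals quoted above can be divided down to per‑chip values. This is plain arithmetic on the rounded numbers in this article, not an Intel specification, and the variable names are only illustrative.

```python
CHIPS = 1_152        # Loihi 2 processors in Hala Point
NEURONS = 1.15e9     # "≈1.15B neurons" (rounded)
SYNAPSES = 128e9     # "128B synapses" (rounded)

neurons_per_chip = NEURONS / CHIPS
synapses_per_chip = SYNAPSES / CHIPS
synapses_per_neuron = SYNAPSES / NEURONS

print(f"neurons per chip:    {neurons_per_chip:,.0f}")
print(f"synapses per chip:   {synapses_per_chip:,.0f}")
print(f"synapses per neuron: {synapses_per_neuron:.0f}")
```

The result is roughly one million neurons per chip and on the order of a hundred synapses per neuron. Because the inputs are rounded, treat the outputs as orders of magnitude.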

State of play: headline platforms to know

- IBM NorthPole (digital, near‑memory): In new tests on a 3‑billion‑parameter LLM, a 16‑card NorthPole server achieved <1 ms/token and 46.9× lower latency than the next most energy‑efficient GPU, while being 72.7× more energy‑efficient than the next lowest‑latency GPU. Earlier, NorthPole was 25× more energy‑efficient than widely used 12‑nm GPUs on vision tasks. IBM Research

Context: A 2025 Nature Communications perspective classifies NorthPole as a “near‑memory efficient sparse tensor processor,” not an SNN or analog compute‑in‑memory device, underscoring that “neuromorphic” spans several hardware styles. Nature

- Intel Loihi 2 / Hala Point (digital, spiking): Hala Point aggregates 140,544 neuromorphic cores into a 1.15B‑neuron system with up to 15 TOPS/W efficiency on conventional DNNs, designed for real‑time workloads and continuous learning research. Intel Corporation

- Event‑based vision (edge): Sony and Prophesee co‑developed stacked sensors that output only pixel changes with microsecond latency and very low power—ideal for robotics, AR, and “always‑on” devices. Prophesee details the asynchronous, sparse data flow that mirrors retinal processing. Sony Semiconductor

- EBRAINS access (Europe): Researchers and startups can remotely run spiking networks on BrainScaleS (analog/mixed‑signal, up to 10,000× faster than real time) and SpiNNaker (digital ARM many‑core). The EU’s Human Brain Project ended in 2023, but EBRAINS keeps the neuromorphic platforms online. ebrains.eu
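
The “output only pixel changes” idea from the event‑based vision item above can be sketched in a few lines of Python. This is a simplified emulation under stated assumptions, not the Sony/Prophesee sensor design or SDK: real event sensors react asynchronously per pixel in hardware, while this sketch compares two ordinary frames. The `Event` type, the `frame_to_events` function, and the threshold value are all hypothetical.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    polarity: int  # +1 = pixel got brighter, -1 = pixel got darker


def frame_to_events(prev: List[List[float]],
                    curr: List[List[float]],
                    threshold: float = 0.2) -> List[Event]:
    """Emit one event per pixel whose log-intensity changed by more than threshold."""
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # +1.0 avoids log(0) for fully dark pixels.
            delta = math.log(c + 1.0) - math.log(p + 1.0)
            if abs(delta) > threshold:
                events.append(Event(x, y, 1 if delta > 0 else -1))
    return events


prev_frame = [[10, 10, 10],
              [10, 10, 10],
              [10, 10, 10]]
curr_frame = [[10, 10, 10],
              [10, 40, 10],
              [10, 10, 5]]

events = frame_to_events(prev_frame, curr_frame)
n_pixels = len(curr_frame) * len(curr_frame[0])
print(events)
print(f"{len(events)} events instead of {n_pixels} pixels")
```

In this toy scene only two of nine pixels changed, so only two events are produced. A static scene would produce none, which is where the power and bandwidth savings come from.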

How it works (without the jargon)

- Near‑memory digital (e.g., NorthPole): Keep weights on the chip next to compute; schedule data so almost nothing leaves the package. Result: big latency and energy wins for inference, including LLMs at modest scales. IBM Research

- Spiking/event‑driven (e.g., Loihi): Neurons only fire when something changes; networks stay mostly quiet. This maps naturally to event cameras and control loops where latency and power matter most. Intel Corporation

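- Analog in‑memory (e.g., PCM crossbars): Resistive devices store weights as conductances and perform multiply‑accumulate with physics: Ohm’s law does the multiply, and Kirchhoff’s current law sums the resulting currents along each shared wire. Great for inference; on‑chip training remains an active research area. IBM Research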
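
Two of the styles above lend themselves to a short illustration. The Python sketch below models an idealized resistive crossbar (no noise, drift, or wire resistance) and a single leaky integrate‑and‑fire neuron. Both functions, their parameters, and the numeric values are assumptions chosen for illustration; neither reflects the actual circuits or programming interfaces of Hermes, NorthPole, or Loihi.

```python
def crossbar_mac(conductances, voltages):
    """Column currents of an idealized resistive crossbar (arbitrary units).

    conductances[i][j] is the conductance between input row i and output
    column j; voltages[i] is the voltage applied to row i. Each cell passes
    a current G * V, and the currents on a shared column wire add up, so the
    result is the vector-matrix product of voltages and conductances.
    """
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        for j in range(n_cols)
    ]


def lif_step(potential, input_current, leak=0.9, threshold=1.0):
    """One time step of a leaky integrate-and-fire neuron.

    Returns (new_potential, spiked). The potential resets to 0 after a spike.
    """
    potential = leak * potential + input_current
    if potential >= threshold:
        return 0.0, True
    return potential, False


# Analog in-memory: a 2x2 weight matrix stored as conductances.
G = [[1.0, 2.0],
     [3.0, 4.0]]
V = [0.5, 1.0]
currents = crossbar_mac(G, V)

# Event-driven: the neuron stays silent until enough input accumulates.
potential = 0.0
spike_times = []
for t, current in enumerate([0.0, 0.0, 0.6, 0.6, 0.0, 0.0]):
    potential, spiked = lif_step(potential, current)
    if spiked:
        spike_times.append(t)

print(currents)      # [3.5, 5.0]
print(spike_times)   # [3]
```

The crossbar computes every output in one physical step instead of looping over weights, and the neuron produces a single spike across six time steps, so downstream units have work to do only once.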

What experts say

“In‑memory computing minimizes or reduces to zero the physical separation between memory and compute.” — Valeria Bragaglia, IBM Research. IBM Research

“We want to learn from the brain… in a mathematical fashion while optimizing for silicon.” — Dharmendra Modha, IBM Fellow (NorthPole). IBM Research

“The computing cost of today’s AI models is rising at unsustainable rates.” — Mike Davies, Intel Labs (Loihi/Hala Point). Intel Corporation

What’s ready now—and what isn’t

Ready or near‑ready

- Edge perception where every milliwatt counts: event‑based cameras + spiking/near‑memory accelerators for industrial monitoring, drones, and wearables. Sony Semiconductor

- Low‑latency inference “appliances” for LLMs of roughly 1–3B parameters, using near‑memory designs that pipeline layers across cards instead of hammering off‑chip DRAM. IBM Research

- Open access to platforms: EBRAINS lets teams prototype on BrainScaleS and SpiNNaker without buying hardware. ebrains.eu

Still maturing

- On‑device training with analog arrays: Programming weights precisely (with drift/noise) is hard; 2024–2025 studies show algorithmic progress but not broad production readiness. Nature

- Tooling and benchmarks: Fragmented software made cross‑chip comparisons difficult; NeuroBench (2025) is the first widely backed attempt at standard, hardware‑aware benchmarks. Nature
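
To illustrate why drift makes precise analog weights hard, the Python sketch below uses a power‑law decay, one empirical form often used to describe conductance drift in phase‑change memory. The article’s sources do not specify a model, so the formula choice, the function name, and the exponent `nu` are all assumptions; the exponent in particular is a placeholder, not a measured device value.

```python
def drifted_conductance(g0, t_seconds, t0_seconds=1.0, nu=0.05):
    """Power-law drift: G(t) = G0 * (t / t0) ** (-nu).

    g0 is the conductance read at reference time t0_seconds after
    programming; nu is a hypothetical drift exponent.
    """
    return g0 * (t_seconds / t0_seconds) ** (-nu)


for label, t in [("1 second", 1.0), ("1 hour", 3600.0), ("1 day", 86400.0)]:
    g = drifted_conductance(1.0, t)
    print(f"after {label}: {g:.3f} of the programmed value")
```

Because a stored weight keeps shrinking after it is written, any multiply‑accumulate that uses it slowly changes too. Training or compensation schemes have to account for that, which is part of why this area is still maturing.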

Market reality check

Forecasts diverge wildly because definitions vary (sensors vs processors; research vs revenue hardware). MarketsandMarkets pegs neuromorphic computing at $28.5M (2024) rising to $1.33B by 2030; Grand View Research counts a broader scope and projects $20.27B by 2030. Treat sizing with caution and watch deployments, not just headlines. MarketsandMarkets
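
One way to read such forecasts is to convert them into an implied compound annual growth rate. The Python sketch below does this for the MarketsandMarkets figures quoted above; the `cagr` helper is an illustrative name, and the calculation assumes growth compounds evenly over the six years from 2024 to 2030. The Grand View Research figure is left out because no base‑year value is given here.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


mm_growth = cagr(28.5e6, 1.33e9, 2030 - 2024)
print(f"implied annual growth: {mm_growth:.1%}")
```

The implied rate is close to 90% per year, sustained for six years, which shows how aggressive the forecast is and why deployments are a better signal than headline totals.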

12–24 month outlook (our base case)

- Edge first: Expect event‑driven perception to land in more robots, factory QA cameras, and battery‑powered devices, where sparse signals translate directly into battery life. Sony Semiconductor

- Inference “appliances” for enterprise LLMs: near‑memory designs (NorthPole‑like) packaged as PCIe cards/2U blades for sub‑10B‑parameter models and agentic workflows with strict latency/energy SLAs. IBM Research

- Open infrastructure: EBRAINS continues to lower barriers; large labs will publish more Hala Point and SpiNNaker results that stress real‑time online learning and control. Intel Corporation

- Software/standards: NeuroBench adoption accelerates; expect progress on common IRs and Python‑friendly stacks that make neuromorphic hardware feel “GPU‑like” to ML engineers. Nature

Risks & unknowns

- Programming model lock‑in: Without CUDA‑grade tooling, adoption stalls. Standards (benchmarks, IRs, APIs) are crucial. Nature

- Analog device variability: Drift/noise/endurance must be tamed for training at scale; promising, but unresolved. Nature

- Hype vs. watts: Vendor numbers are improving, but independent, workload‑level measurements—especially for LLMs and agents—will determine winners. (Nature and Science reporting on NorthPole provides early, third‑party context.) Nature

Bottom line

Neuromorphic computing is not one chip; it’s a family of brain‑inspired designs aimed at the same pain point: moving bits is what burns watts. With the IEA expecting data‑centre electricity use to more than double by 2030, and AI the main driver, designs that keep weights by the compute (and only compute on events that matter) look poised to graduate from lab demos to targeted, real‑world systems. Or as IBM’s Modha frames it, the goal is to learn from the brain “in a mathematical fashion while optimizing for silicon.” Watch edge vision first, then inference appliances that trim latency and energy without rewriting your entire AI stack. IEA

Sources & further reading

- IBM Research explainer on neuromorphic computing; quotes and architecture details (NorthPole, Hermes). IBM Research

- IBM NorthPole results for LLM inference and prior vision benchmarks. IBM Research

- Intel’s Hala Point press materials (specs, Mike Davies quote); Sandia deployment context. Intel Corporation

- IEA analysis on data‑centre and AI electricity demand to 2030. IEA

- Nature Communications perspectives: NorthPole as near‑memory tensor processor; NeuroBench benchmarking framework. Nature

- Sony/Prophesee event‑based sensor releases and technical explainer. Sony Semiconductor

- EBRAINS access to BrainScaleS and SpiNNaker neuromorphic platforms. ebrains.eu