- An Italian team evaluated ChatGPT on pathology problems across 10 subspecialties; only 32.1% of answers were error‑free, meaning roughly 68% contained at least one error. PubMed

- The bot’s citations were shaky: of 214 references, 12.1% were inaccurate and 17.8% were entirely fabricated (“hallucinated”). PubMed

- The study, published in Virchows Archiv (European Journal of Pathology), rated answers “useful” in 62.2% of cases but concluded that the chatbot is still unsuitable for routine diagnostics. PubMed

- Italian outlets and Polish media amplified the finding, noting misdiagnoses in skin and breast cancer cases and quoting the lead author’s caution that “the clinician’s eye remains irreplaceable.” ANSA.it

- Other peer‑reviewed studies paint a mixed picture: top chatbots can score ~66–72% on oncology multiple‑choice questions but ~32–38% when graded on free‑text rationales. JAMA Network

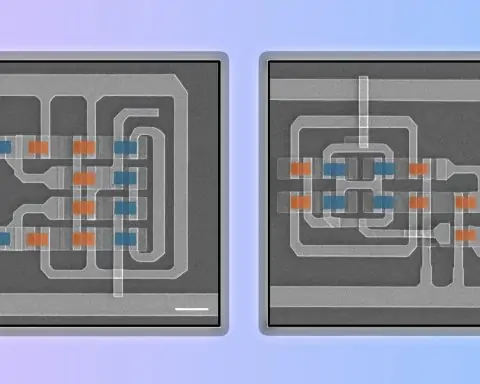

- Specialized medical LLMs (e.g., Google’s Med‑PaLM 2 and AMIE) show substantial gains and can improve clinicians’ differential diagnoses in controlled settings, but they remain research tools that have not yet been cleared by regulators. Nature

- Regulators are tightening guardrails: the EU AI Act entered into force on Aug. 1, 2024 (with staged obligations through 2026–2027), and the U.S. FDA, building on its Good Machine Learning Practice (GMLP) principles, issued draft guidance on AI‑enabled device software in January 2025. U.S. Food and Drug Administration

What the new study actually found

Researchers from Humanitas University and Humanitas Research Hospital in Milan tested ChatGPT with clinico‑pathological scenarios spanning ten subspecialties. Pathologists scored the outputs for usefulness and errors. The headline results:

- Useful in 62.2% of cases

- Error‑free in 32.1% (so 67.9% contained at least one error)

- Citations (214 references): 70.1% correct, 12.1% inaccurate, 17.8% non‑existent
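As a rough consistency check on the citation figures, the short Python sketch below back-calculates approximate reference counts, assuming all three percentages are shares of the same 214 references. The resulting counts (about 150 correct, 26 inaccurate and 38 non-existent) are our own arithmetic, not numbers reported by the authors.

```python
# Back-of-envelope reconstruction (our assumption, not figures from the paper):
# convert the reported citation percentages into approximate reference counts,
# assuming all three shares are fractions of the same 214 references.
TOTAL_REFERENCES = 214

reported_shares = {
    "correct": 70.1,
    "inaccurate": 12.1,
    "non-existent": 17.8,
}

# Round each share to the nearest whole reference.
approx_counts = {
    label: round(TOTAL_REFERENCES * pct / 100)
    for label, pct in reported_shares.items()
}

# Sanity checks: the shares should sum to 100% and the counts back to 214.
share_total = round(sum(reported_shares.values()), 1)
count_total = sum(approx_counts.values())

# The error share for answers is simply the complement of the error-free share.
error_share = round(100 - 32.1, 1)

for label, n in approx_counts.items():
    print(f"{label:>13}: ~{n} references ({100 * n / TOTAL_REFERENCES:.1f}%)")
print(f"shares sum to {share_total}%, counts sum to {count_total}")
print(f"answers with at least one error: {error_share}%")
```

Because the rounded counts add back up to exactly 214 and each reproduces its published percentage, the reported figures are at least internally consistent.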

The paper concludes that, despite some utility, performance variability and errors “underscore [ChatGPT’s] inadequacy for routine diagnostic use” and emphasize the “irreplaceable role of human experts.” PubMed

Polish outlet WirtualneMedia, summarizing Italian press reports, added examples of misdiagnosed skin cancer and breast cancer, and relayed lead author Vincenzo Guastafierro’s warning: “The clinician’s eye remains irreplaceable. AI should be support, not a substitute.” Wirtualne Media

Important caveat

The study tested a general‑purpose, text‑only chatbot on pathology reasoning; it did not test an FDA‑cleared imaging algorithm or a regulated clinical decision‑support tool. The “~70% wrong” headline means “contains at least one error,” not “reached the wrong diagnosis every time.” Scope matters. PubMed

How this compares with other recent evidence

- Oncology vignettes (JAMA Network Open, 2024): In 79 cancer cases, top models reached ~66–72% accuracy on multiple‑choice questions, but accuracy fell to ~32–38% when experts graded free‑text answers, which shows how strongly the evaluation format shapes results. JAMA Network

- Med‑PaLM 2 (Nature Medicine, 2025): A domain‑tuned medical LLM achieved 86.5% on USMLE‑style questions, and in a pilot, specialists sometimes preferred its answers to real‑world queries. Promising, but still a research setting. Nature

- AMIE (Nature, 2025): An LLM optimized for diagnostic reasoning improved clinicians’ differential diagnosis lists in randomized comparisons against standard search tools (higher top‑n accuracy, more comprehensive DDx). Nature

- Evidence synthesis (npj Digital Medicine, 2025): A pipeline called TrialMind boosted recall and cut screening time in systematic reviews when used with humans, underlining the value of human‑AI collaboration rather than replacement. Nature

Bottom line: Performance varies widely by task (multiple‑choice vs. free‑text), tuning (general vs. medical‑specific models), and workflow (stand‑alone bot vs. clinician‑in‑the‑loop). The Milan team’s findings highlight risks precisely where errors are most consequential: diagnostic reasoning. PubMed

Expert voices

- Vincenzo Guastafierro, MD (Humanitas): “The clinician’s eye remains irreplaceable… AI should be support, not a substitute.” (translation) Wirtualne Media

- Jesse M. Ehrenfeld, MD, MPH (AMA): For AI in health care to succeed, “physicians and patients have to trust it… and we have to get it right.” American Medical Association

Why it matters for patients and clinicians

- Patients: Chatbots can be useful for education, but shouldn’t be used to self‑diagnose. Ask for sources, and treat AI output as a starting point for a conversation with a clinician. American Medical Association

- Clinicians: Treat general‑purpose LLMs like an unvetted junior assistant: helpful for brainstorming or drafting, but every output needs verification against guidelines, the chart, and your own judgment. PubMed

- Hospitals/health systems: Gains come when AI is embedded in workflows with guardrails such as retrieval‑augmented generation (to curb hallucinations), uncertainty flags, audit logs, and human oversight. Nature

Regulatory context (what changes next)

- European Union: The EU AI Act entered into force Aug. 1, 2024. Bans on “unacceptable‑risk” AI have applied since Feb. 2, 2025, and general‑purpose AI rules since Aug. 2, 2025. High‑risk obligations, which will touch many medical uses, phase in from Aug. 2026, with AI built into regulated products such as medical devices following by Aug. 2027. Expect stricter documentation, risk management, and post‑market monitoring. European Commission

- United States: The FDA has published Good Machine Learning Practice principles and draft guidance on AI‑enabled device software functions (life‑cycle management, transparency, bias mitigation, and Predetermined Change Control Plans for learning systems). U.S. Food and Drug Administration

Implication: Diagnostic chatbots are likely to face much tighter evidence and transparency requirements before clinicians can rely on them in routine care. European Parliament

Forecast: What to expect over the next 12–24 months

- From “general” to “medical‑grade”: Expect more domain‑tuned LLMs (such as Med‑PaLM 2 and AMIE‑style systems) that combine reasoning with retrieval of vetted medical sources. Early studies suggest better safety and acceptance, but clinical validation remains the hurdle. Nature

- Clinician‑in‑the‑loop by design: Hospitals will prioritize tools that augment rather than replace clinicians (for example, by generating differential lists, citing guidelines, and flagging uncertainty), because that is the direction regulators and professional bodies are pointing. American Medical Association

- Proof over promise: Procurement will shift toward measurable outcomes (time saved, improved recall, fewer near‑misses) demonstrated in prospective studies, not just benchmark scores. Evidence‑synthesis tools such as TrialMind foreshadow this direction. Nature

- Hardening against hallucinations: Vendors will race to add RAG, calibrated uncertainty, and source‑linking. Studies documenting fabricated citations (including in the Milan work) make this a near‑term must‑fix. PubMed

What you should do now

- If you’re a clinician:

  - Use AI only as an adjunct; verify against guidelines and the patient record.

  - Prefer tools that show sources (with working links) and report confidence/uncertainty.

  - Document your oversight; don’t copy‑paste AI text into the chart without review. American Medical Association

- If you’re a patient:

  - Treat chatbot answers as educational information, not medical advice.

  - Bring AI outputs to your appointment and ask your clinician to confirm or correct them. American Medical Association

Methodology notes (to keep the “70%” in perspective)

The Milan study evaluated pathology reasoning in text (no microscope images), using synthetic but guideline‑conformant scenarios reviewed by specialists. Six pathologists rated answers and citations. The ~70% figure reflects any error present, not necessarily an incorrect final diagnosis every time. That nuance explains why other studies can report higher multiple‑choice accuracy yet much lower accuracy for free‑text clinical reasoning. PubMed

Sources

Primary study and media coverage: Virchows Archiv (European Journal of Pathology) abstract; ANSA; WirtualneMedia. Context and counter‑evidence: JAMA Network Open (oncology cases), Nature Medicine (Med‑PaLM 2), Nature (AMIE), npj Digital Medicine (TrialMind). Policy: European Commission / Parliament on the EU AI Act; FDA guidance and GMLP principles.

Editor’s note: This article is for a general audience and does not constitute medical advice. Always consult a qualified clinician for diagnosis and treatment.