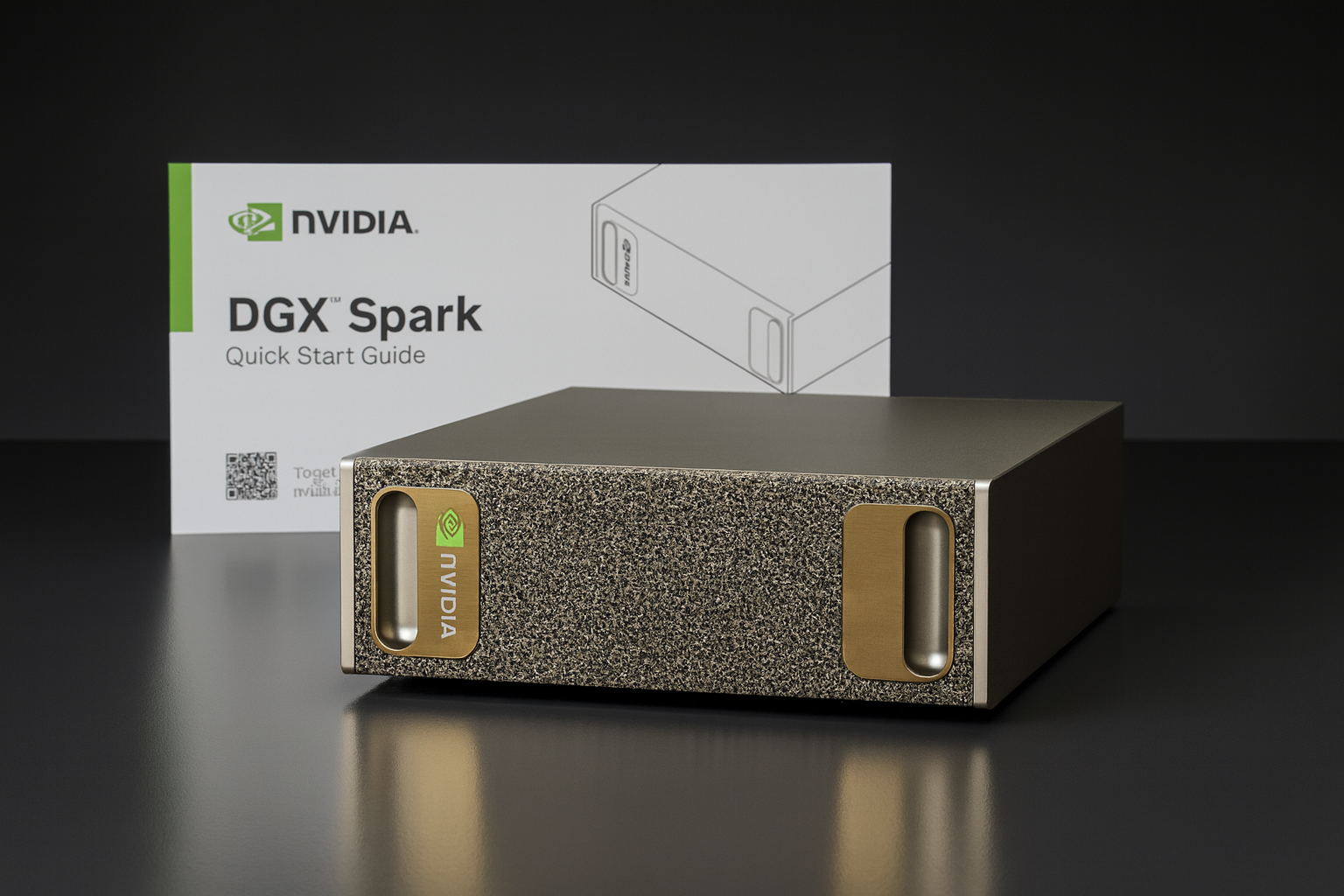

- What is DGX Spark? A mini AI computer (150×150×50.5 mm; 1.2 kg) built on the GB10 Grace Blackwell chip, with 128 GB of unified CPU‑GPU memory, up to 4 TB NVMe, powered by a 240 W PSU. NVIDIA claims up to 1 PFLOP of AI performance in FP4 precision. I/O includes 10 GbE RJ‑45 and two QSFP56 200 Gb/s (ConnectX‑7) ports to interconnect multiple units.

- Price and availability. List price $3,999; sales started October 15, 2025 via NVIDIA.com and partners.

- Who is it for? NVIDIA targets researchers, developers, and students — a “personal AI supercomputer” for the desk, not a gaming rig.

- Where it came from. The product evolved from Project DIGITS, shown at CES 2025, and was renamed DGX Spark at GTC in March 2025.

- Models and scaling. A single unit can work locally with models of up to 200B parameters (via FP4/NVFP4); two units linked over 200 GbE can handle ~405B parameters.

- Software. Ships with DGX OS and the full NVIDIA AI stack (CUDA, NIM, NGC, “Playbooks,” and the Sync app for remote development).

- OEM partners. Acer, ASUS, Dell, Gigabyte, HP, Lenovo, and MSI are preparing GB10‑based variants.

- Controversies. In early FP8/FP16 comparisons, Spark doesn’t always beat cheaper mini‑PCs based on AMD Strix Halo; its edge is FP4/NVFP4, 128 GB of unified memory, and a ready‑to‑use software stack.

Introduction: “A Petaflop in Your Palm” — What NVIDIA Is Really Proposing

NVIDIA’s DGX Spark arrives as “the smallest AI supercomputer” — a lunchbox‑sized device for your desk that runs off a standard wall outlet. Jensen Huang ceremonially delivered the first units to Elon Musk at SpaceX and Sam Altman at OpenAI, quipping about “the smallest supercomputer next to the biggest rocket.”

Behind the marketing is real tech: GB10 Grace Blackwell combines a 20‑core Arm CPU (10× Cortex‑X925 + 10× Cortex‑A725) with a next‑gen Blackwell GPU featuring 5th‑gen Tensor Cores and hardware support for FP4/NVFP4. That’s what underpins the “up to 1 PFLOP” figure — in FP4, where 4‑bit quantization of weights/activations dramatically increases throughput.

Hardware: From Chassis to 200 Gb/s Networking

At the heart of Spark is GB10, paired with 128 GB of LPDDR5x unified memory visible to both CPU and GPU, which helps with very large models by avoiding slow RAM↔VRAM copies. The 150×150×50.5 mm enclosure also houses up to 4 TB of self‑encrypting NVMe storage and an I/O set that includes four USB‑C ports, HDMI 2.1a, 10 GbE RJ‑45, Wi‑Fi 7/Bluetooth 5.4, and, crucially, two QSFP56 ports driven by a ConnectX‑7 200 Gb/s NIC for clustering and RDMA. The whole system runs from a 240 W power supply.

Where a typical mini‑PC might stop at 2.5 GbE, Spark’s 200 Gb/s interconnect (80× that bandwidth) lets you link two boxes over RDMA and split a model across their combined memory, hence the two‑node scenario for ~405B‑parameter models.
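A back‑of‑the‑envelope check shows why ~200B parameters fit on one unit while ~405B need two. This is a sketch under stated assumptions, not official sizing guidance: it assumes NVFP4 costs about 4.5 bits per weight once block scales are included, counts weights only (no KV cache, activations, or OS overhead), and uses decimal gigabytes. The function names are illustrative.

```python
# Rough weight-memory check for one vs. two DGX Spark units.
# Assumptions (illustrative, not official sizing guidance):
#   * NVFP4 costs ~4.5 bits per weight once block scales are included
#   * only weights are counted; KV cache, activations and OS overhead are ignored
#   * 128 GB of unified memory per unit; decimal gigabytes throughout

NVFP4_BITS_PER_WEIGHT = 4.5
FP16_BITS_PER_WEIGHT = 16
MEMORY_PER_UNIT_GB = 128


def weights_gb(params: float, bits_per_weight: float) -> float:
    """Size of the model weights alone, in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


def fits(params: float, bits_per_weight: float, units: int) -> bool:
    """True if the weights fit in the combined memory of `units` Spark boxes."""
    return weights_gb(params, bits_per_weight) <= units * MEMORY_PER_UNIT_GB


if __name__ == "__main__":
    for params in (200e9, 405e9):
        size = weights_gb(params, NVFP4_BITS_PER_WEIGHT)
        print(f"{params / 1e9:.0f}B: {size:.1f} GB at NVFP4 "
              f"(FP16: {weights_gb(params, FP16_BITS_PER_WEIGHT):.0f} GB); "
              f"1 unit: {fits(params, NVFP4_BITS_PER_WEIGHT, 1)}, "
              f"2 units: {fits(params, NVFP4_BITS_PER_WEIGHT, 2)}")
```

Under these assumptions a 200B model needs about 112.5 GB at NVFP4 (400 GB at FP16), and a 405B model about 228 GB, which only fits once two units pool their memory. The headroom left for KV cache on a single unit is thin, so the 200B figure is best read as an upper bound.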

Where the “Petaflop” Comes From: A Quick FP4/NVFP4 Primer

The headline “up to 1 PFLOP” refers to FP4 performance (with sparsity), a 4‑bit floating‑point format implemented in hardware on Blackwell. NVFP4 adds block‑wise micro‑scaling: each block of 16 values shares an FP8 (E4M3) scale factor on top of a per‑tensor FP32 scale, and the Tensor Cores apply those scales in hardware. The result is much smaller memory footprints and higher throughput than FP8/FP16 while maintaining quality for many inference workloads.
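To make block‑wise micro‑scaling concrete, here is a simplified NVFP4‑style quantizer in plain Python. It is a sketch under stated assumptions, not NVIDIA’s implementation: it assumes 16‑value blocks and the FP4 E2M1 value grid, keeps each block’s scale as a regular float instead of rounding it to FP8 (E4M3), omits the per‑tensor FP32 scale, and breaks rounding ties toward the smaller magnitude. The function names are hypothetical.

```python
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX = 6.0
BLOCK_SIZE = 16  # number of values sharing one scale factor

Block = Tuple[float, List[float]]  # (block scale, FP4 codes)


def nearest_e2m1(x: float) -> float:
    """Round x to the closest signed E2M1 value (ties go to the smaller magnitude)."""
    mag = min(E2M1_GRID, key=lambda v: abs(abs(x) - v))
    return mag if x >= 0 else -mag


def quantize(values: List[float]) -> List[Block]:
    """Split values into blocks; scale each so its largest |value| maps to 6.0."""
    blocks: List[Block] = []
    for start in range(0, len(values), BLOCK_SIZE):
        chunk = values[start:start + BLOCK_SIZE]
        amax = max(abs(v) for v in chunk)
        # Simplification: real NVFP4 stores this scale in FP8 (E4M3).
        scale = amax / E2M1_MAX if amax > 0 else 1.0
        blocks.append((scale, [nearest_e2m1(v / scale) for v in chunk]))
    return blocks


def dequantize(blocks: List[Block]) -> List[float]:
    """Multiply each FP4 code by its block's scale to recover approximate values."""
    return [code * scale for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    weights = [0.02 * (i - 16) for i in range(32)]  # two toy blocks of 16
    restored = dequantize(quantize(weights))
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"max abs error: {worst:.4f}")
```

Because the widest gap on the E2M1 grid is 2 (between 4 and 6), every value’s error is at most one block scale, and a single outlier only coarsens its own 16‑value block rather than the whole tensor. That locality is the point of micro‑scaling.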

NVIDIA’s guidance shows NVFP4 can shrink model size ~3.5× vs FP16 and ~1.8× vs FP8 with minimal accuracy loss — which is what makes 200B local models feasible without HBM or a server room.
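The ~3.5× and ~1.8× figures follow from simple bit accounting. A quick check, assuming NVFP4 stores 4‑bit values plus one 8‑bit block scale per 16 values; the per‑tensor FP32 scale is amortized over the whole tensor and ignored here:

```python
# Bits of storage per weight, assuming NVFP4 = 4-bit E2M1 values
# plus one 8-bit (E4M3) scale shared by each block of 16 values.
FP4_VALUE_BITS = 4
SCALE_BITS = 8
BLOCK_SIZE = 16

NVFP4_BITS = FP4_VALUE_BITS + SCALE_BITS / BLOCK_SIZE  # 4.5 bits per weight


def shrink_factor(baseline_bits: float) -> float:
    """How many times smaller NVFP4 weights are than a baseline format."""
    return baseline_bits / NVFP4_BITS


if __name__ == "__main__":
    print(f"vs FP16: {shrink_factor(16):.2f}x")  # 3.56x
    print(f"vs FP8:  {shrink_factor(8):.2f}x")   # 1.78x
```

The block scale is why the ratio against FP16 lands near 3.5× rather than a clean 4×: each weight carries an extra half bit of scaling metadata.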

Software & Developer Experience

Just as important as the hardware is the environment: DGX OS, curated NGC containers, NVIDIA NIM (microservices for inference), AI Blueprints/Playbooks (step‑by‑step recipes), and the Sync app that exposes Spark to your IDE (VS Code, Cursor) as a remote workstation. Early reviewers highlight smooth onboarding and sensible curation of starter materials.

Developer and open‑source author Simon Willison calls Spark a “snazzy little computer,” while noting that the ARM64 + CUDA ecosystem is still maturing — “great hardware, early days for the ecosystem,” though docs and containers are improving rapidly.

What Experts Are Saying (Quotes)

- Jensen Huang (NVIDIA): “Placing an AI supercomputer on the desks of every data scientist…” — a vision of a personal supercomputer for mainstream AI creators.

- Kyunghyun Cho (NYU): “DGX Spark allows us to access peta‑scale computing on our desktop…,” accelerating prototyping and private research experiments.

- Jensen Huang (on the SpaceX hand‑off): “…the smallest supercomputer next to the biggest rocket.”

- HotHardware: “128 GB of fast memory is more than any consumer GPU.”

- ServeTheHome (Patrick Kennedy): “This is so cool,” pointing out that 200 GbE QSFP56 is key to Spark’s value.

- Level1Techs (forum): “Not a product you benchmark — it’s one you build with.”

- PC Gamer (on Blackwell’s edge): “Blackwell has hardware support for FP4.”

- Inc.com (on positioning): “It won’t play video games.”

Performance and Value: What Do the Numbers Mean?

This is where things get interesting, and contentious. PC Gamer compared the $3,999 Spark with an AMD Strix Halo mini‑PC costing about $2,348. In FP8/FP16 tests the results were sometimes close, which raises return‑on‑investment questions if you look only at raw numbers. On the other hand, commentators stress that Spark’s strength lies in FP4/NVFP4, 128 GB of unified memory, and an integrated software stack, not in chasing benchmark records at higher precisions.

HotHardware suggests treating Spark as a “developer companion piece” — a remote‑controlled sidecar to your main workstation — not as a data‑center‑class dGPU replacement. They also highlight the convenience of NVIDIA Sync and the quality of the Playbooks.

In short: if your workflows leverage FP4/NVFP4 and need a large unified memory pool (128 GB), and you prefer local development over cloud dependence, Spark shines. If you’re optimizing strictly for tokens per second per dollar in FP16/FP8, a consumer dGPU build or a Strix Halo mini‑PC may be cheaper, though typically with less memory and less integrated tooling.
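The “$ per tok/s” framing is easy to make concrete. This sketch uses only the two prices quoted above; throughput is left as an input you would measure yourself on the same model and precision, since no benchmark numbers are assumed here. The metric is only meaningful for models that fit on both machines, which is the memory caveat raised above. Function names are illustrative.

```python
SPARK_PRICE_USD = 3999
STRIX_HALO_PRICE_USD = 2348  # price of PC Gamer's comparison system


def dollars_per_tps(price_usd: float, tokens_per_second: float) -> float:
    """Hardware cost per unit of sustained throughput (lower is better)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return price_usd / tokens_per_second


def breakeven_speedup(price_a: float, price_b: float) -> float:
    """How many times faster machine A must be than B to match B's $ per tok/s."""
    return price_a / price_b


if __name__ == "__main__":
    # Spark must be ~1.7x faster than the Strix Halo box to tie on $ per tok/s.
    print(f"{breakeven_speedup(SPARK_PRICE_USD, STRIX_HALO_PRICE_USD):.2f}x")
```

In practice you would call `dollars_per_tps(SPARK_PRICE_USD, measured_tps)` with your own measurement for each machine and compare the results.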

Use Cases That Make Sense Right Now

- Local LLMs and agentic prototypes (RAG, multimodal, coding), with a focus on privacy and no cloud dependency.

- On‑desk computer vision and image generation (Roboflow, ComfyUI, segmentation, visual search).

- Robotics and “physical AI” — fast experiment loops without queueing for cluster time.

- Education: a setup that goes from unboxing to a working agent quickly, which makes it a good platform for learning FP4 quantization and container orchestration.

Keep in mind that “supercomputer” here is AI‑marketing shorthand: this is not a TOP500‑class machine. For context, El Capitan at Lawrence Livermore National Laboratory has a theoretical peak of about 2.79 exaFLOPS in FP64, a far more demanding precision than Spark’s FP4 figure, and it is not a consumer product. Spark earns the “desktop supercomputer” label through architecture and software, not sheer power draw or liquid cooling.

Availability & Ecosystem

Sales began October 15, 2025. Beyond NVIDIA’s own (often “out of stock”) configuration, partner versions (Acer, ASUS, Dell, Gigabyte, HP, Lenovo, MSI) are in the pipeline. The concept remains consistent — GB10 + 128 GB + 200 GbE — with potential differences in firmware, service, and configuration details.

Verdict: Who Should Buy DGX Spark?

- Yes — if you’re a researcher/developer who values local work with large models (FP4/NVFP4), 128 GB of unified memory, and a turnkey NVIDIA AI stack.

- Consider carefully — if you’re chasing the best $‑per‑token/s in FP8/FP16: a Strix Halo mini‑PC or a consumer‑GPU workstation might be more economical, at the cost of memory capacity and integration.

- No — if you want a gaming PC. As Inc. aptly notes: “It won’t play video games.”

Ultimately, as the Level1Techs forum put it, DGX Spark is “not a product you benchmark — it’s one you build with.” As a “petaflop in your palm,” it could genuinely help democratize AI beyond the server room.

Sources, Specs & Further Reading (selection)

- NVIDIA DGX Spark product page: specs (dimensions, weight, memory; formerly Project DIGITS).

- NVIDIA Marketplace listing: price, “A Grace Blackwell AI Supercomputer on your desk.”

- NVIDIA DGX Spark full specifications and support: 20‑core Arm CPU, ConnectX‑7 200 Gb/s, 240 W.

- NVIDIA CES 2025 announcement: Project DIGITS, 200B and 405B models, product mission.

- NVIDIA blog: first deliveries, “supercomputer in the palm of your hand,” partner list.

- The Verge: sale date, price, “personal AI supercomputer.”

- Tom’s Hardware: deliveries to Musk/Altman, market context.

- PC Gamer: comparison with AMD Strix Halo, FP8/FP16 value takeaways.

- HotHardware: “developer companion piece,” Playbooks/Sync, benefits of 128 GB unified memory.

- ServeTheHome: port breakdown (including QSFP56 200 GbE), hands‑on impressions.

- Simon Willison: developer review (ARM64 + CUDA ecosystem maturing).

- NVIDIA Blackwell architecture brief and NVFP4 technical blog: FP4/NVFP4, 5th‑gen Tensor Cores.