- Helix is Figure’s in‑house Vision–Language–Action (VLA) model that converts pixels and words directly into dexterous robot actions, controlling the entire humanoid upper body (hands, wrists, torso, and head; 35 DoF in total) at 200 Hz. FigureAI

- In 2025, Figure showed hour‑long autonomous logistics runs and then household feats: folding laundry (a first for multi‑fingered humanoids) and loading a dishwasher—all with the same model, no new algorithms, just new data. FigureAI

- Fresh today (Sep 16, 2025): Figure raised a $1B+ Series C at a $39B valuation; CEO Brett Adcock says the capital will go toward “scaling out our AI platform Helix” and BotQ manufacturing. Reuters

What is Helix (VLA)?

Helix is a generalist “pixels‑to‑actions” model that runs entirely onboard Figure’s humanoid robots. It unifies perception, language understanding, and learned control so the robot can see a scene, understand a spoken/text instruction, and execute dexterous bimanual motions in real time. Helix uses a two‑part design:

- System 2 (S2): an internet‑pretrained VLM (~7B parameters) that “thinks slow” at 7–9 Hz for semantics and language.

- System 1 (S1): an 80M‑parameter visuomotor policy that “thinks fast” at 200 Hz, producing smooth, continuous control for the entire upper body.

Both run concurrently on dual embedded GPUs inside the robot. FigureAI
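To make the two rates concrete, here is a minimal single-threaded sketch of how a slow planner and a fast controller can share one latent. It is an illustration only, not Figure's implementation: the 8 Hz value is an assumption picked from the reported 7–9 Hz band, `slow_system` and `fast_system` are hypothetical stand-ins, and the real systems run concurrently on separate GPUs rather than in one loop.

```python
from dataclasses import dataclass
from typing import List, Tuple

S1_HZ = 200  # fast control rate reported for S1
S2_HZ = 8    # assumed value inside the reported 7-9 Hz band for S2


@dataclass
class SharedLatent:
    value: int = -1   # toy stand-in for S2's latent vector
    version: int = 0  # number of S2 refreshes so far


def slow_system(tick: int) -> int:
    """Hypothetical S2 stand-in: the 'latent' is just the tick it was computed at."""
    return tick


def fast_system(latent: int, tick: int) -> Tuple[int, int]:
    """Hypothetical S1 stand-in: an 'action' pairs the latest latent with the current tick."""
    return (latent, tick)


def simulate(seconds: int = 1) -> Tuple[SharedLatent, List[Tuple[int, int]]]:
    """Step the fast loop at S1_HZ and refresh the latent at S2_HZ."""
    shared = SharedLatent()
    actions: List[Tuple[int, int]] = []
    ticks_per_refresh = S1_HZ // S2_HZ  # 25 fast ticks per slow refresh
    for tick in range(seconds * S1_HZ):
        if tick % ticks_per_refresh == 0:
            # The slow system overwrites the shared latent in place.
            shared.value = slow_system(tick)
            shared.version += 1
        # The fast system always acts on whatever latent is currently stored.
        actions.append(fast_system(shared.value, tick))
    return shared, actions
```

Under these assumed rates, each latent is reused for 25 consecutive control steps, which is why the fast policy has to stay reactive on its own between refreshes.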

“Helix is a first‑of‑its‑kind ‘System 1, System 2’ VLA for high‑rate, dexterous control of the entire humanoid upper body.” FigureAI

Training data & recipe. Figure reports roughly 500 hours of high‑quality teleoperation across multiple robots and operators. Instructions are auto‑labeled by a VLM that writes a “hindsight” command for each clip (in effect, what prompt would have produced this behavior?). A single set of weights covers many skills, with no task‑specific heads or per‑task fine‑tuning. FigureAI
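The hindsight‑labeling step can be sketched as a small pipeline. Everything named here is hypothetical: `Clip`, `LabeledClip`, `hindsight_label`, and `toy_vlm` are illustrative names, the prompt wording paraphrases the description above, and a real system would pass video frames to an actual VLM rather than a text summary to a stub.

```python
from dataclasses import dataclass
from typing import Callable, List

# Assumed prompt wording, paraphrased from the description above.
PROMPT = "What prompt would have produced this behavior?"


@dataclass
class Clip:
    clip_id: str
    summary: str  # stand-in for the video frames and recorded robot actions


@dataclass
class LabeledClip:
    clip: Clip
    instruction: str


def hindsight_label(clips: List[Clip], vlm: Callable[[str, Clip], str]) -> List[LabeledClip]:
    """Ask the VLM for an after-the-fact instruction for every clip."""
    return [LabeledClip(clip, vlm(PROMPT, clip).strip()) for clip in clips]


def toy_vlm(prompt: str, clip: Clip) -> str:
    """Stub VLM: turns the clip summary into an imperative sentence."""
    return f"Please {clip.summary}. "


demo = hindsight_label(
    [Clip("clip-001", "hand the bag of cookies to the other robot")],
    toy_vlm,
)
```

The resulting (instruction, clip) pairs are what a language‑conditioned policy would train on, so no human has to write instructions during teleoperation.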

What Helix can do today (2025)

1) Zero‑shot household manipulation

- Collaborative grocery put‑away: two robots run the same weights and coordinate handoffs via language prompts.

- Pick up anything: robust language‑to‑grasp behavior across thousands of novel objects. FigureAI

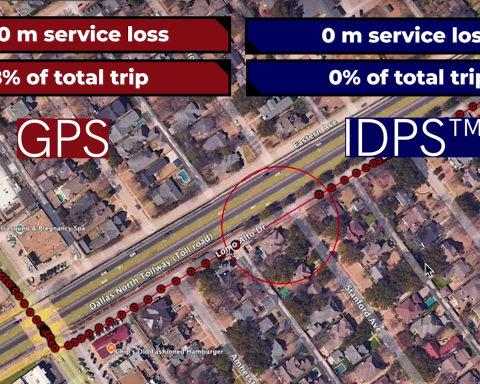

2) Logistics (moving packages on belts, orienting labels)

- After initial deployment, Figure scaled data and added vision memory, state history, and force feedback (“touch”). Results: ~20% faster handling (≈ 4.05 s per package) and ≈95% barcode‑orientation success—approaching human‑level throughput. FigureAI
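A quick back‑of‑envelope check helps put these numbers in context. The sketch below converts the reported 4.05 s per package into an hourly rate (about 889 packages per hour if sustained) and shows the two ways “~20% faster” can be read. The function names are illustrative, and which reading Figure intends is an assumption either way.

```python
SECONDS_PER_PACKAGE = 4.05  # reported handling time
IMPROVEMENT = 0.20          # reported "~20% faster"


def packages_per_hour(seconds_per_package: float) -> float:
    """Sustained throughput implied by a per-package handling time."""
    return 3600.0 / seconds_per_package


def baseline_if_time_reduced(new_seconds: float, improvement: float) -> float:
    """Reading 1: handling time dropped by `improvement` (new = old * (1 - x))."""
    return new_seconds / (1.0 - improvement)


def baseline_if_rate_increased(new_seconds: float, improvement: float) -> float:
    """Reading 2: packages per hour rose by `improvement` (old time = new * (1 + x))."""
    return new_seconds * (1.0 + improvement)


rate = packages_per_hour(SECONDS_PER_PACKAGE)
old_time_reading_1 = baseline_if_time_reduced(SECONDS_PER_PACKAGE, IMPROVEMENT)
old_time_reading_2 = baseline_if_rate_increased(SECONDS_PER_PACKAGE, IMPROVEMENT)
```

The two readings imply a prior handling time of roughly 5.06 s or 4.86 s per package respectively, so the distinction matters little at this precision.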

3) Laundry folding (deformable‑object dexterity)

Figure calls this “a first for humanoids” with multi‑fingered hands. It is executed by the same Helix architecture used in logistics, with no hyperparameter changes and only a new dataset. Skills include edge‑tracing, corner‑pinching, smoothing, and recovery from slips. FigureAI

“A first for humanoids… folding laundry fully autonomously using an end‑to‑end neural network.” FigureAI

4) Dishwasher loading (bimanual, precise)

The same Helix that sorted packages and folded towels now loads dishes: singulating stacked plates, reorienting glasses bimanually, placing items with centimeter‑level tolerance, and recovering gracefully from misgrasps. Figure stresses that this took no new algorithms, “just new data.” FigureAI

“No new algorithms, no special‑case engineering, just new data.” FigureAI

5) Walking (separate locomotion controller)

In parallel with Helix, Figure trained a reinforcement‑learning walking policy in simulation that transfers zero‑shot to real robots for natural gait; recent tests probed robustness (e.g., reduced vision). FigureAI

2025 timeline at a glance (latest first)

- Sep 16 — $1B+ Series C at $39B post‑money; funds earmarked to scale Helix, build compute, and accelerate data collection. “This milestone is critical… scaling out our AI platform Helix and BotQ manufacturing.” —Brett Adcock. Reuters

- Sep 3 — Helix loads the dishwasher (same model; data‑only extension). FigureAI

- Aug 12 — Helix folds laundry (multi‑finger, end‑to‑end). FigureAI

- Jun 7 — Scaling Helix in logistics: vision memory, state history, force sensing; 4.05 s/package, ≈95% barcode success. FigureAI

- Feb 26 — Helix in logistics; S1 upgrades incl. implicit stereo, multi‑scale vision, self‑calibration, and a “sport mode.” FigureAI

- Feb 20 — Helix introduced; described as the first VLA to directly control a humanoid’s full upper body, to run entirely onboard low‑power GPUs, and to support multi‑robot collaboration with one model. FigureAI

How Helix compares to other VLAs

The VLA paradigm was popularized in 2023 with Google DeepMind’s RT‑2, which showed that web‑scale vision‑language pretraining can be fine‑tuned for robot actions. Helix follows the same vision→language→action idea but extends it to continuous, high‑rate, high‑DoF humanoid control with fully onboard deployment. Google DeepMind

- RT‑2/Gemini Robotics: strong semantic generalization; typically discretized actions or lower‑DoF setups; Google has since announced Gemini Robotics as a broader embodied stack. The Verge

- Helix: end‑to‑end continuous control at 200 Hz over hands + torso + head, bimanual collaboration, multi‑robot operation; one weight set for many behaviors. FigureAI

Under the hood (for the technically curious)

- Architecture: S2 (VLM, ~7B) emits a latent goal vector; S1 (visuomotor transformer, 80M) fuses that latent with multi‑scale visual features + robot state to output continuous wrist poses, finger motions, head/torso targets at 200 Hz. FigureAI

- Training: end‑to‑end regression; gradients flow from S1 into S2 via the latent; temporal offset during training mirrors asynchronous inference (S2 slow, S1 fast). FigureAI

- Deployment: model‑parallel on dual embedded GPUs; S2 updates a shared latent in the background; S1 closes the control loop in real time. FigureAI

- Scaling (logistics): added vision memory, state history, force feedback (touch) to boost robustness and throughput with more demonstration data. FigureAI
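The temporal offset mentioned under Training can be illustrated with a toy sampler. This is a sketch under stated assumptions, not Figure's published procedure: the fixed `lag` and the function name `offset_pairs` are hypothetical, and the source does not say how the offset is chosen. The idea is only that the slow system's input should be older than the fast system's input during training, as it is at inference.

```python
from typing import List, Tuple


def offset_pairs(num_steps: int, lag: int) -> List[Tuple[int, int]]:
    """Return (s2_frame_index, s1_frame_index) pairs for one demonstration.

    S1 sees the observation at step t, while the latent it is conditioned on
    comes from an observation `lag` steps earlier (clamped to the clip start).
    """
    if lag < 0:
        raise ValueError("lag must be non-negative")
    return [(max(t - lag, 0), t) for t in range(num_steps)]


# Toy example: a 5-step clip where S2's view trails S1's by 2 steps.
pairs = offset_pairs(5, 2)
```

With a 200 Hz fast loop and a slow loop in the 7–9 Hz band, a lag on the order of a few dozen control steps would be a plausible setting, though that value is an assumption.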

Funding, factory, and route to scale

- Capital for Helix: Today’s round takes Figure to a $39B valuation; funds go to Helix scaling, GPU infrastructure, and large‑scale data collection. Reuters

- Manufacturing (BotQ): Figure’s BotQ plant targets up to 12,000 humanoids/year initially; strategy includes robots building robots, with Helix assisting factory tasks. FigureAI

- Battery (F.03): a 2.3 kWh pack enabling ~5 hours runtime, fast‑charge at 2 kW, and stringent UL/UN safety targets—key to longer Helix sessions. FigureAI
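The battery figures above imply a rough power budget, worked out in the sketch below. It assumes constant draw over the quoted runtime and an ideal charge at the full 2 kW with no taper or losses, so the results (about 460 W average draw and about 69 minutes to charge) are simplified estimates rather than specifications. The function names are illustrative.

```python
PACK_KWH = 2.3   # reported pack capacity
RUNTIME_H = 5.0  # reported approximate runtime
CHARGE_KW = 2.0  # reported fast-charge power


def average_draw_watts(pack_kwh: float, runtime_h: float) -> float:
    """Average power if the full pack is drained evenly over the runtime."""
    return pack_kwh * 1000.0 / runtime_h


def ideal_charge_minutes(pack_kwh: float, charge_kw: float) -> float:
    """Lower bound on charge time, ignoring taper and conversion losses."""
    return pack_kwh / charge_kw * 60.0


draw_w = average_draw_watts(PACK_KWH, RUNTIME_H)
charge_min = ideal_charge_minutes(PACK_KWH, CHARGE_KW)
```

Real charge times will be longer than the ideal figure because chargers typically reduce power as the pack approaches full.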

Strategic context & debate

- CEO outlook. Brett Adcock recently argued humanoids are nearing practical home utility and that Helix now enables hour‑long uninterrupted work with touch and short‑term memory—“approaching human speed and performance.” Business Insider

- Skeptics’ view. AI luminary Fei‑Fei Li cautions that one human‑like form isn’t optimal for all tasks: “requirements… are so vast that… sticking with one form is energy inefficient.” Business Insider

Expert quotes you can use

“Helix is a first‑of‑its‑kind ‘System 1, System 2’ VLA…” FigureAI

“A first for humanoids… folding laundry fully autonomously…” FigureAI

“No new algorithms… just new data.” FigureAI

“This milestone is critical to unlocking the next stage of growth… scaling out our AI platform Helix.” — Brett Adcock Reuters

“Having very few forms… is energy inefficient.” — Fei‑Fei Li Business Insider

FAQ

Is Helix the same thing as the walking controller?

No. Helix handles vision‑language→dexterous manipulation. Walking uses a separate RL policy trained in simulation and transferred to real robots. FigureAI

Did Figure really drop OpenAI to build Helix?

Yes. Figure ended its OpenAI deal in early Feb 2025, saying it had made a “major breakthrough” in end‑to‑end robot AI developed in‑house. Decrypt

What makes Helix notable vs. prior VLAs?

High‑rate continuous control of a full humanoid upper body onboard, multi‑robot collaboration, and a track record across logistics + household tasks with data‑only extensions. FigureAI

When will we see home trials?

Figure has publicly discussed accelerated home testing timelines after launching Helix; it has continued to post household task demos through summer 2025. Interesting Engineering

Sources

- Figure technical reports & updates: Helix intro; logistics; scaling; laundry; dishwasher; Series C; BotQ; F.03 battery; RL walking. FigureAI

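- News & analysis: Reuters on funding; Business Insider interview; Decrypt on the OpenAI split; Interesting Engineering on home testing; DeepMind RT‑2/Gemini Robotics for context. Google DeepMind, The Verge, Reuters, Business Insider, Decrypt, Interesting Engineering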